Fake Lanes Fool Tesla A.I. Into Driving Into Oncoming Traffic

Researchers from Tencent Keen Security Lab have published a report detailing their successful attacks on Tesla firmware, including remote control over the steering, and an adversarial example attack on the autopilot that confuses the car into driving into the oncoming traffic lane.

The researchers used an attack chain that they disclosed to Tesla, and which Tesla now claims has been eliminated with recent patches.

To effect the remote steering attack, the researchers had to bypass several redundant layers of protection. Having done this, they were able to write an app that would let them connect a video-game controller to a mobile device and then steer a target vehicle, overriding the actual steering wheel in the car as well as the autopilot systems. This attack has some limitations: while a car in Park or traveling at high speed on Cruise Control can be taken over completely, a car that has recently shifted from R to D can only be remote controlled at speeds up to 8 km/h.

Tesla vehicles use a variety of neural networks for autopilot and other functions (such as detecting rain on the windscreen and switching on the wipers); the researchers were able to use adversarial examples (small, mostly human-imperceptible changes that cause machine learning systems to make gross, out-of-proportion errors) to attack these.
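To make the idea of an adversarial example concrete, here is a minimal sketch using a toy linear classifier. It is not Tesla's network and not the method from the Keen Lab report; the model, the input size, and the names `score`, `classify` and `adversarial_nudge` are all assumptions made up for illustration. It shows only the general principle: when an input has many dimensions, a tiny nudge to each one, chosen in the direction that hurts the model most, adds up to a large change in the output.

```python
# Toy illustration of an adversarial example (hypothetical model, not Tesla's).

def score(weights, bias, x):
    """Linear score: positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def adversarial_nudge(weights, x, epsilon):
    """Move every input value by at most epsilon, against the weight's sign.

    For a linear model this lowers the score by epsilon * sum(|w|), so the
    total effect grows with the number of input dimensions.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

# Assumed setup: 1000 "pixels" with alternating +1 / -1 weights.
n = 1000
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(n)]
bias = 1.0
x = [0.5] * n            # the clean input
epsilon = 0.01           # per-pixel change, 1% of the 0..1 value range

x_adv = adversarial_nudge(weights, x, epsilon)
largest_change = max(abs(a - b) for a, b in zip(x, x_adv))

print("clean label:      ", classify(weights, bias, x))
print("adversarial label:", classify(weights, bias, x_adv))
print("largest per-pixel change:", largest_change)
```

Real attacks on deep networks follow the same logic but use the network's gradient in place of the fixed weights, and physical attacks like the ones described here must also survive being printed, placed on a road and seen through a camera.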

Most dramatically, the researchers attacked the autopilot’s lane-detection systems. By adding noise to lane markings, they were able to fool the autopilot into losing the lanes altogether. However, the patches they had to apply to the lane markings would not be hard for humans to spot.

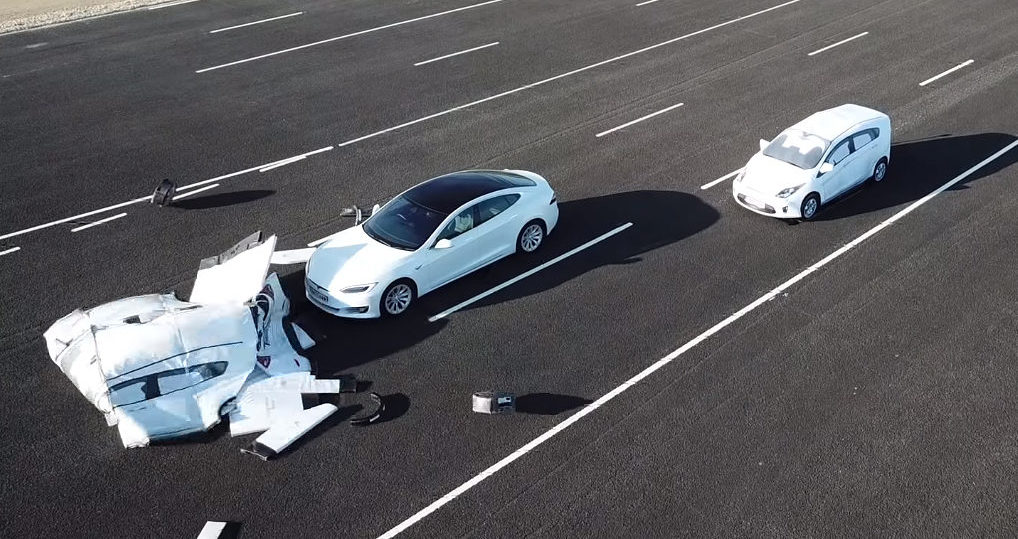

Much more seriously, they were able to use “small stickers” on the ground to effect a “fake lane attack” that fooled the autopilot into steering into the opposite lanes where oncoming traffic would be moving. This worked even when the targeted vehicle was operating in daylight without snow, dust or other interference.

Misleading the autopilot vehicle to the wrong direction with some patches made by a malicious attacker, in sometimes, is more dangerous than making it fail to recognize the lane. We paint three inconspicuous tiny square in the picture took from camera, and the vision module would recognize it as a lane with a high degree of confidence as below shows…

After that we tried to build such a scene in physical: we pasted some small stickers as interference patches on the ground in an intersection. We hope to use these patches to guide the Tesla vehicle in the Autosteer mode driving to the reverse lane. The test scenario like Fig 34 shows, red dashes are the stickers, the vehicle would regard them as the continuation of its right lane, and ignore the real left lane opposite the intersection. When it travels to the middle of the intersection, it would take the real left lane as its right lane and drive into the reverse lane.

Tesla autopilot module’s lane recognition function has a good robustness in an ordinary external environment (no strong light, rain, snow, sand and dust interference), but it still doesn’t handle the situation correctly in our test scenario. This kind of attack is simple to deploy, and the materials are easy to obtain. As we talked in the previous introduction of Tesla’s lane recognition function, Tesla uses a pure computer vision solution for lane recognition, and we found in this attack experiment that the vehicle driving decision is only based on computer vision lane recognition results.

Our experiments proved that this architecture has security risks and reverse lane recognition is one of the necessary functions for autonomous driving in non-closed roads. In the scene we build, if the vehicle knows that the fake lane is pointing to the reverse lane, it should ignore this fake lane and then it could avoid a traffic accident.

Security Research of Tesla Autopilot [Tencent Keen Security Lab]